How to Diagonalize a Matrix: A Comprehensive Guide

Matrix diagonalization is a foundational topic in linear algebra with applications across mathematics and the applied sciences. At its core, diagonalization rewrites a given square matrix in diagonal form by means of a similarity transformation. But why is this transformation significant?

The Power of Diagonal Matrices

In a diagonal matrix, every entry off the main diagonal is zero. As a result, calculations involving these matrices, such as matrix multiplication or raising to a power, are greatly simplified. If we can represent a more complex matrix in diagonal form (or close to it), we can harness these computational advantages.

The Concept of Similarity

For a matrix to be diagonalizable, it must be "similar" to a diagonal matrix. Two matrices \(A\) and \(B\) are said to be similar if there exists an invertible matrix \(P\) such that \(B = P^{-1}AP\). When matrix \(A\) is similar to a diagonal matrix \(D\), we can express \(A\) as \(A = PDP^{-1}\). Here, \(D\) contains the eigenvalues of \(A\) on its diagonal, and \(P\) is composed of the corresponding eigenvectors of \(A\).

Applications of Diagonalization

The benefits of diagonalization are vast. In differential equations, a diagonalized matrix simplifies the process of solving systems of linear differential equations. In quantum mechanics, diagonalization is used to find the "energy eigenstates" of a system. In computer graphics, diagonalization can help with transformations and scaling. The simplification that diagonalization offers makes many complex problems tractable.

In essence, diagonalization is more than just a mathematical technique; it is a tool that unveils the inherent simplicity in complex systems, making them understandable and computationally manageable. As we delve deeper into this topic, we'll see how to harness this power and the intricacies involved in the process.

Diagonalizability and Determining Factors

Diagonalizability is a crucial concept in linear algebra. It tells us whether a given matrix can be transformed into a diagonal matrix using a similarity transformation. This section provides an in-depth look at the criteria for diagonalizability and the factors that determine it.

What Does It Mean for a Matrix to be Diagonalizable?

A matrix \( A \) is said to be diagonalizable if there exists an invertible matrix \( P \) and a diagonal matrix \( D \) such that \( A = PDP^{-1} \). In other words, we can express \( A \) in terms of a product of matrices where one of them is diagonal. The matrix \( P \) is formed by the eigenvectors of \( A \), and the diagonal matrix \( D \) contains the corresponding eigenvalues of \( A \) on its main diagonal.

Eigenvalues, Eigenvectors, and Diagonalizability

The eigenvalues and eigenvectors of a matrix are central to the concept of diagonalizability. Here's why:

- Eigenvalues: These are scalar values \( \lambda \) such that \( A\mathbf{v} = \lambda\mathbf{v} \) for some non-zero vector \( \mathbf{v} \). The eigenvalues are found by solving the characteristic equation \( \text{det}(A - \lambda I) = 0 \), where \( I \) is the identity matrix; the left-hand side is the characteristic polynomial of the matrix.

- Eigenvectors: For each eigenvalue \( \lambda \), there exists a corresponding eigenvector \( \mathbf{v} \). These vectors form the columns of the matrix \( P \) in the similarity transformation.

- Multiplicity and Diagonalizability: Every eigenvalue has two types of multiplicity:

- Algebraic Multiplicity: It is the number of times an eigenvalue appears as a root of the characteristic polynomial.

- Geometric Multiplicity: It is the number of linearly independent eigenvectors corresponding to an eigenvalue. It is determined by the nullity of the matrix \( A - \lambda I \).

For a matrix to be diagonalizable, the geometric multiplicity of each eigenvalue must equal its algebraic multiplicity. If this condition isn't met for even one eigenvalue, the matrix isn't diagonalizable.

Deciding Diagonalizability

To determine if a matrix is diagonalizable:

- Calculate the eigenvalues of the matrix.

- For each eigenvalue, determine its algebraic and geometric multiplicities.

- Check if the geometric multiplicity equals the algebraic multiplicity for all eigenvalues. If so, the matrix is diagonalizable.
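
The checklist above can be sketched in a few lines of Python. This is a minimal illustration rather than a general-purpose routine: it assumes the eigenvalues and their algebraic multiplicities are already known and supplied by the caller, it uses exact rational arithmetic so that rank decisions are not affected by round-off, and the function names (`rank`, `geometric_multiplicity`, `is_diagonalizable`) and the two test matrices are hypothetical choices made for this example.

```python
from fractions import Fraction


def rank(matrix):
    """Rank of a matrix via Gaussian elimination in exact rational arithmetic."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows, n_cols = len(rows), len(rows[0])
    pivot_row = 0
    for col in range(n_cols):
        # Find a row at or below pivot_row with a non-zero entry in this column.
        pivot = next((r for r in range(pivot_row, n_rows) if rows[r][col] != 0), None)
        if pivot is None:
            continue
        rows[pivot_row], rows[pivot] = rows[pivot], rows[pivot_row]
        # Eliminate the entries below the pivot.
        for r in range(pivot_row + 1, n_rows):
            factor = rows[r][col] / rows[pivot_row][col]
            rows[r] = [a - factor * b for a, b in zip(rows[r], rows[pivot_row])]
        pivot_row += 1
        if pivot_row == n_rows:
            break
    return pivot_row


def geometric_multiplicity(matrix, eigenvalue):
    """Nullity of (A - lambda I), i.e. n - rank(A - lambda I)."""
    n = len(matrix)
    shifted = [[matrix[i][j] - (eigenvalue if i == j else 0) for j in range(n)]
               for i in range(n)]
    return n - rank(shifted)


def is_diagonalizable(matrix, algebraic_multiplicities):
    """algebraic_multiplicities maps each eigenvalue to its algebraic multiplicity."""
    return all(geometric_multiplicity(matrix, lam) == mult
               for lam, mult in algebraic_multiplicities.items())


# Both matrices have eigenvalue 2 (algebraic multiplicity 2) and eigenvalue 5.
diagonal = [[2, 0, 0], [0, 2, 0], [0, 0, 5]]
defective = [[2, 1, 0], [0, 2, 0], [0, 0, 5]]

print(is_diagonalizable(diagonal, {2: 2, 5: 1}))   # True
print(is_diagonalizable(defective, {2: 2, 5: 1}))  # False
```

For the second matrix, \( A - 2I \) has rank 2, so the eigenvalue 2 has only one independent eigenvector although it is a double root.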

A Note on Defective Matrices

When the geometric multiplicity of an eigenvalue is less than its algebraic multiplicity, we encounter what's known as a "defective" matrix. Such matrices are not diagonalizable. They lack the necessary number of linearly independent eigenvectors to form the matrix \( P \).

While many matrices are diagonalizable, not all are, and the key lies in the properties of their eigenvalues and eigenvectors. Understanding these determining factors is essential to harness the power and simplicity that comes with diagonalization.

Handling Repeated Eigenvalues

One of the intriguing aspects of matrix diagonalizability is how it handles matrices with repeated eigenvalues. These are eigenvalues with an algebraic multiplicity greater than one. The challenge arises when trying to ascertain if a matrix with repeated eigenvalues has enough linearly independent eigenvectors to be diagonalizable. Let's delve deeper into this topic.

The Essence of Repeated Eigenvalues

When we say an eigenvalue is "repeated," we're referring to its algebraic multiplicity. For instance, if the characteristic polynomial of a matrix has a factor of \((\lambda - 2)^3\), then the eigenvalue \(2\) has an algebraic multiplicity of \(3\). The matrix may or may not have three linearly independent eigenvectors corresponding to this eigenvalue.

The Crucial Role of Geometric Multiplicity

The geometric multiplicity of an eigenvalue is the number of linearly independent eigenvectors associated with that eigenvalue. This is a crucial determinant of diagonalizability. For a matrix to be diagonalizable:

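- The geometric multiplicity of each eigenvalue must equal its algebraic multiplicity. (It is always at least \(1\) and can never exceed the algebraic multiplicity.)

- Equivalently, the sum of the geometric multiplicities of all eigenvalues must equal the size (dimension) of the matrix.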

When dealing with repeated eigenvalues:

- If the geometric multiplicity of a repeated eigenvalue is less than its algebraic multiplicity, the matrix is not diagonalizable.

- If the geometric multiplicity matches its algebraic multiplicity for every eigenvalue, the matrix is diagonalizable.

Challenges with Repeated Eigenvalues

The complexity arises because it's not guaranteed that a matrix with an eigenvalue of algebraic multiplicity \( k \) will have \( k \) linearly independent eigenvectors. If it does, it's diagonalizable. If not, we encounter a "defective" matrix, which cannot be diagonalized.

Example

Consider a matrix \( A \) for which the eigenvalue \( \lambda = 3 \) has an algebraic multiplicity of \(2\). Two scenarios can arise:

- Diagonalizable: The matrix has two linearly independent eigenvectors corresponding to \( \lambda = 3 \). Hence, it's diagonalizable.

- Not Diagonalizable: The matrix only has one linearly independent eigenvector for \( \lambda = 3 \), despite its algebraic multiplicity being \(2\). In this case, it's not diagonalizable.
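
As a concrete illustration, here is one possible pair of matrices realizing the two scenarios. The matrices \( A_1 \) and \( A_2 \) are chosen for this example only. Both have characteristic polynomial \( (\lambda - 3)^2 \):

\[
A_1 = \begin{bmatrix} 3 & 0 \\ 0 & 3 \end{bmatrix}, \qquad A_2 = \begin{bmatrix} 3 & 1 \\ 0 & 3 \end{bmatrix}
\]

\[
A_1 - 3I = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix} \ (\text{nullity } 2), \qquad A_2 - 3I = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix} \ (\text{nullity } 1)
\]

So \( A_1 \) has two independent eigenvectors for \( \lambda = 3 \) and is diagonalizable (it is already diagonal), while \( A_2 \) has only one and is not.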

Addressing Non-Diagonalizability

For matrices that aren't diagonalizable due to repeated eigenvalues, an alternative form called the Jordan Normal Form can be used. While not diagonal, this form is block-diagonal and retains many of the advantageous properties of diagonal matrices.

Repeated eigenvalues introduce an element of uncertainty regarding diagonalizability, but understanding the interplay between algebraic and geometric multiplicities provides clarity. The challenge is not the repetition of the eigenvalue per se but whether there are enough linearly independent eigenvectors associated with that eigenvalue.

Symmetric Matrices and Diagonalizability

Symmetric matrices hold a special place among matrix types due to their distinct properties and wide-ranging applications. These matrices, defined by the property \( A = A^T \) and assumed throughout this section to have real entries, enjoy a guarantee of diagonalizability that other matrix types might not. Let's explore why this is the case and the nuances involved.

Orthogonal Diagonalizability

One of the most remarkable properties of symmetric matrices is that they are not just diagonalizable, but orthogonally diagonalizable. This means that a symmetric matrix \( A \) can be expressed as:

\[

A = PDP^T,

\]

where \( D \) is a diagonal matrix, and \( P \) is an orthogonal matrix (i.e., \( P^T = P^{-1} \)). The columns of \( P \) are the eigenvectors of \( A \), and they are mutually orthogonal and of unit length.

Why are Symmetric Matrices Diagonalizable?

The diagonalizability of symmetric matrices can be attributed to the following properties:

- Real Eigenvalues: All eigenvalues of a real symmetric matrix are real numbers. This is a direct consequence of the matrix's symmetry.

- Orthogonal Eigenvectors: Eigenvectors corresponding to distinct eigenvalues of a symmetric matrix are orthogonal to each other. In addition, for a symmetric matrix the geometric multiplicity of every eigenvalue equals its algebraic multiplicity, so each eigenspace supplies as many independent eigenvectors as needed. Together, these facts ensure that we can always find a full orthonormal set of eigenvectors for a symmetric matrix, guaranteeing its diagonalizability.

Spectral Theorem

The properties mentioned above are encapsulated in the Spectral Theorem for symmetric matrices. It states:

Every real symmetric matrix is orthogonally diagonalizable.

This theorem provides both a theoretical foundation and a practical tool. In computational contexts, the orthogonal diagonalizability of symmetric matrices simplifies various problems, making algorithms more efficient.
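
The theorem can be checked numerically on a small case. The sketch below assumes NumPy is available and uses `numpy.linalg.eigh`, its routine for symmetric (Hermitian) input. The matrix is an arbitrary example chosen here because it has a repeated eigenvalue (1, 1, 4), which shows that an orthonormal set of eigenvectors exists even without distinct eigenvalues.

```python
import numpy as np

# Symmetric matrix with a repeated eigenvalue (eigenvalues 1, 1, 4).
A = np.array([[2.0, 1.0, 1.0],
              [1.0, 2.0, 1.0],
              [1.0, 1.0, 2.0]])

# eigh returns the eigenvalues in ascending order and
# orthonormal eigenvectors as the columns of P.
eigenvalues, P = np.linalg.eigh(A)
D = np.diag(eigenvalues)

print(np.round(eigenvalues, 6))
print(np.allclose(P.T @ P, np.eye(3)))   # columns of P are orthonormal
print(np.allclose(P @ D @ P.T, A))       # A = P D P^T
```

Note that no matrix inverse is computed: the transpose of \( P \) plays the role of \( P^{-1} \).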

Implications and Applications

Orthogonal diagonalizability has profound implications:

- Simplification: Operations like matrix exponentiation become much simpler when dealing with diagonal matrices. If you have to compute \( A^n \) for some large \( n \) and \( A \) is symmetric, you can instead compute \( D^n \) (which is straightforward) and use the relation \( A^n = PD^nP^T \).

- Stability in Computations: Computations with orthogonal matrices are numerically stable, which is a boon for applications in numerical linear algebra.

- Physical Interpretations: In physics, especially in quantum mechanics, Hermitian matrices (the complex analogue of real symmetric matrices) arise as operators corresponding to observable quantities. Their eigenvectors represent states, and the orthogonal nature of these eigenvectors has physical significance.

Symmetric matrices offer a blend of beauty and utility. Their guaranteed diagonalizability and the orthogonal nature of their eigenvectors make them both mathematically appealing and practically invaluable. Whether in the realm of pure mathematics, computer algorithms, or the laws of quantum mechanics, the properties of symmetric matrices play a pivotal role.

Properties of Diagonalizable Matrices

Diagonalizable matrices, those matrices that can be transformed into a diagonal form through similarity transformations, possess a variety of intriguing and useful properties. Understanding these properties is essential for both theoretical purposes and practical applications. Let's delve into some of these key properties.

1. Powers of Diagonalizable Matrices

If \( A \) is a diagonalizable matrix, then its powers can be easily computed using the diagonal form. Specifically, if \( A = PDP^{-1} \), then for any positive integer \( n \):

\[

A^n = PD^nP^{-1}

\]

This property stems from the fact that raising a diagonal matrix to a power simply involves raising each of its diagonal entries to that power. This makes operations like matrix exponentiation computationally more efficient.
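
A small exact computation illustrates the identity. The sketch below assumes the matrix \( A = \begin{bmatrix} 3 & 2 \\ 1 & 4 \end{bmatrix} \), whose eigenvalues are 2 and 5 with eigenvectors \( (-2, 1) \) and \( (1, 1) \). The helper names `matmul` and `inverse_2x2` are hypothetical and written only for this example.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def inverse_2x2(M):
    """Inverse of a 2x2 matrix via the adjugate formula (exact arithmetic)."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


A = [[3, 2], [1, 4]]
P = [[-2, 1], [1, 1]]   # columns are eigenvectors for eigenvalues 2 and 5
eigenvalues = [2, 5]
n = 6

# D^n only needs each diagonal entry raised to the n-th power.
D_n = [[eigenvalues[0] ** n, 0], [0, eigenvalues[1] ** n]]
via_diagonalization = matmul(matmul(P, D_n), inverse_2x2(P))

# Reference: multiply A by itself n times.
direct = [[1, 0], [0, 1]]
for _ in range(n):
    direct = matmul(direct, A)

print(via_diagonalization == direct)  # True
```

The diagonal route needs two matrix products regardless of \( n \), whereas the direct loop needs \( n \) of them.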

2. Commutativity and Diagonalizability

Two matrices \( A \) and \( B \) are said to commute if \( AB = BA \). If \( A \) and \( B \) are both diagonalizable and they commute, their product \( AB \) is also diagonalizable.

3. Inverse of a Diagonalizable Matrix

If matrix \( A \) is diagonalizable with \( A = PDP^{-1} \) and if \( A \) is invertible, then its inverse is given by:

\[

A^{-1} = PD^{-1}P^{-1}

\]

Here, \( D^{-1} \) is the inverse of the diagonal matrix \( D \), obtained by taking the reciprocal of each diagonal entry. All of these entries are non-zero, because they are the eigenvalues of \( A \) and \( A \) is invertible.
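
The formula can be confirmed by multiplying it against \( A = PDP^{-1} \) directly:

\[
A \left( P D^{-1} P^{-1} \right) = P D \left( P^{-1} P \right) D^{-1} P^{-1} = P \left( D D^{-1} \right) P^{-1} = P P^{-1} = I
\]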

4. Diagonalizability is Preserved under Conjugation

If matrix \( A \) is diagonalizable and \( B \) is any invertible matrix, then the conjugate matrix \( B^{-1}AB \) is also diagonalizable.

5. Products and Sums of Diagonalizable Matrices

While the sum or product of two diagonalizable matrices is not necessarily diagonalizable, if two matrices \( A \) and \( B \) are diagonalizable and they commute, they can be diagonalized simultaneously using the same matrix \( P \). Their sum \( A + B \) and their product \( AB \) are then diagonalized by that same \( P \).

6. Matrix Polynomials

If \( A \) is a diagonalizable matrix and \( p(t) \) is any polynomial, then the matrix polynomial \( p(A) \) is also diagonalizable. For instance, if \( p(t) = t^2 + 2t + 3 \), then the matrix \( p(A) = A^2 + 2A + 3I \) (where \( I \) is the identity matrix) is diagonalizable.
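
To see why, substitute \( A = PDP^{-1} \) into the example polynomial and use \( A^2 = PD^2P^{-1} \) together with \( I = PP^{-1} \):

\[
p(A) = P D^2 P^{-1} + 2 P D P^{-1} + 3 P P^{-1} = P \left( D^2 + 2D + 3I \right) P^{-1} = P \, p(D) \, P^{-1}
\]

The matrix \( p(D) \) is diagonal with entries \( p(\lambda_i) \), so \( p(A) \) is diagonalized by the same \( P \), and its eigenvalues are \( p(\lambda_i) \).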

7. Diagonalizability and Trace & Determinant

The trace (sum of diagonal entries) and determinant of a matrix remain unchanged under similarity transformations. This means the trace and determinant of matrix \( A \) are the same as those of its diagonal form \( D\). This can sometimes offer shortcuts in computations.

Diagonalizable matrices serve as a cornerstone in many areas of linear algebra and its applications. Their properties simplify computations, provide insights into the structure of the matrix, and make them amenable to various mathematical manipulations. Recognizing and understanding these properties can offer both computational advantages and deeper insights into the nature of linear transformations represented by these matrices.

Special Cases: The Zero Matrix

The zero matrix, often denoted as \( \mathbf{0} \) or simply \( 0 \), is a matrix in which all of its entries are zero. It is a unique entity in the world of matrices and has several distinctive properties. When it comes to diagonalizability, the zero matrix presents a straightforward case, but it's worth delving into its characteristics to gain a more profound understanding.

Definition of the Zero Matrix

The zero matrix is defined for any dimension \( m \times n \), although only square zero matrices are relevant to diagonalization. For example, a \( 2 \times 2 \) zero matrix is given by:

\[

\mathbf{0} = \begin{bmatrix}

0 & 0 \\

0 & 0 \\

\end{bmatrix}

\]

Diagonalizability of the Zero Matrix

The zero matrix is inherently diagonal because all its off-diagonal elements are already zero. Thus, it is trivially diagonalizable. In the context of the definition \( A = PDP^{-1} \), for the zero matrix:

- \( D \) is itself the zero matrix.

- \( P \) can be any invertible matrix, and the equation will still hold, making the diagonalization non-unique in terms of the matrix \( P \).

Eigenvalues and Eigenvectors of the Zero Matrix

Every eigenvalue of the zero matrix is \( 0 \). This is easy to see because for any non-zero vector \( \mathbf{v} \):

\[

\mathbf{0} \cdot \mathbf{v} = \mathbf{0}

\]

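This equation shows that \( \mathbf{v} \) is an eigenvector corresponding to the eigenvalue \( 0 \), since \( \mathbf{0}\mathbf{v} = 0 \cdot \mathbf{v} \). Because this holds for every non-zero vector \( \mathbf{v} \), the eigenspace of \( 0 \) is the whole space: for the \( n \times n \) zero matrix, the geometric multiplicity of \( 0 \) is \( n \), equal to its algebraic multiplicity.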

Other Properties Related to the Zero Matrix

- Addition: The zero matrix acts as the additive identity in matrix addition. For any matrix \( A \), \( A + \mathbf{0} = A \).

- Multiplication: Multiplying any matrix by the zero matrix yields the zero matrix.

- Invertibility: The zero matrix is singular, meaning it is not invertible. This is because its determinant is zero.

The zero matrix, while seemingly simple, is fundamental in linear algebra. Its inherent diagonal nature means it's automatically diagonalizable, serving as a base or trivial case when discussing diagonalization. Understanding its properties provides clarity when working with more complex matrices and offers insights into the foundational aspects of linear algebra.

Constructing Unique Matrices

In the context of diagonalizability, there are matrices that stand out due to their unique characteristics. These matrices challenge our understanding and are often used as counterexamples to highlight specific properties. One such matrix is an invertible matrix that is not diagonalizable. Let's explore this concept further.

Invertible but not Diagonalizable

While many matrices are both invertible and diagonalizable, the two properties are not inherently linked. It's possible for a matrix to be invertible (non-singular) but not diagonalizable. Such matrices are somewhat rare but extremely valuable in deepening our understanding of diagonalizability.

A Classic Example

Consider first a standard example of a non-diagonalizable matrix:

\[

A = \begin{bmatrix}

0 & 1 \\

0 & 0 \\

\end{bmatrix}

\]

Eigenvalues and Eigenvectors

The eigenvalues of this matrix are both \( \lambda = 0 \), which means the eigenvalue 0 has an algebraic multiplicity of 2. However, there's only one linearly independent eigenvector associated with this eigenvalue. This discrepancy between algebraic and geometric multiplicities makes the matrix non-diagonalizable.

Invertibility

However, the matrix \( A \) is not invertible either. Its determinant is zero, and it does not have full rank. So, while it serves as an example of a non-diagonalizable matrix, it doesn't meet the criterion of being invertible.

Constructing an Invertible, Non-Diagonalizable Matrix

To find a matrix that's both invertible and non-diagonalizable, we can consider Jordan blocks. A classic example is:

\[

B = \begin{bmatrix}

1 & 1 \\

0 & 1 \\

\end{bmatrix}

\]

Eigenvalues and Eigenvectors

For this matrix, the eigenvalue is \( \lambda = 1 \) with an algebraic multiplicity of 2. However, similar to the previous example, there's only one linearly independent eigenvector for this eigenvalue.

Invertibility

The determinant of matrix \( B \) is 1 (non-zero), indicating that it's invertible. Thus, \( B \) is an example of a matrix that's invertible but not diagonalizable.
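
Both claims about \( B \) can be checked by hand. The characteristic polynomial is \( \text{det}(B - \lambda I) = (1 - \lambda)^2 \), and the eigenvector equation for \( \lambda = 1 \) reads:

\[
(B - I) \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} y \\ 0 \end{bmatrix} = \mathbf{0} \iff y = 0
\]

Every eigenvector is therefore a multiple of \( \begin{bmatrix} 1 \\ 0 \end{bmatrix} \), so the geometric multiplicity is 1 while the algebraic multiplicity is 2.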

Implications

These unique matrices serve as reminders that diagonalizability and invertibility are independent properties. While many matrices we encounter in applications might possess both properties, it's crucial to recognize and understand the exceptions.

Positive Semidefinite Matrices

Positive semidefinite matrices play a crucial role in various areas of mathematics and its applications, especially in optimization, statistics, and quantum mechanics. Let's delve deep into their properties, diagonalizability, and their significance.

Definition

A matrix \( A \) is said to be positive semidefinite if it is Hermitian (meaning the matrix is equal to its conjugate transpose, \( A = A^* \)) and all its eigenvalues are non-negative. For real-valued matrices, being Hermitian means the matrix is symmetric, \( A = A^T \).

Equivalently, a Hermitian matrix \( A \) is positive semidefinite if for every vector \( x \),

\[

x^* Ax \geq 0

\]

where \( x^* \) denotes the conjugate transpose (or simply the transpose for real-valued vectors) of \( x \).

Diagonalizability

One of the key properties of positive semidefinite matrices is their diagonalizability:

- Eigenvalues: Since a positive semidefinite matrix is Hermitian, all its eigenvalues are real. Furthermore, by definition, these eigenvalues are non-negative.

- Orthogonal Eigenvectors: The eigenvectors corresponding to distinct eigenvalues of a Hermitian matrix are orthogonal. By the spectral theorem, every positive semidefinite matrix has an orthonormal basis of eigenvectors.

- Orthogonal Diagonalizability: Given the above properties, a positive semidefinite matrix \( A \) can be unitarily diagonalized as \( A = PDP^* \) (or orthogonally diagonalized as \( A = PDP^T \) for real matrices), where \( D \) is a diagonal matrix containing the eigenvalues of \( A \), and \( P \) is a unitary (or orthogonal) matrix whose columns are the eigenvectors of \( A \).

Significance and Applications

Positive semidefinite matrices are pervasive in various fields:

- Optimization: A quadratic function \( x^T Q x \) with symmetric \( Q \) is convex exactly when \( Q \) is positive semidefinite, which is why such matrices appear throughout quadratic programming.

- Statistics: In statistics, the covariance matrix of any random vector is positive semidefinite. This ensures that variances (diagonal elements) are non-negative and the matrix captures a valid covariance structure.

- Quantum Mechanics: In quantum mechanics, density matrices representing quantum states are positive semidefinite.

- Machine Learning: Kernel methods, especially in support vector machines, use positive semidefinite matrices (kernels) to capture similarities between data points.

Key Properties

- Matrix Products: The product of a matrix and its transpose (or conjugate transpose) is always positive semidefinite. That is, for any matrix \( M \), the matrix \( MM^* \) (or \( MM^T \) for real matrices) is positive semidefinite.

- Sum of Positive Semidefinite Matrices: The sum of two positive semidefinite matrices is also positive semidefinite.

- Determinant and Trace: The determinant and trace of a positive semidefinite matrix are always non-negative.
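
The first property can be checked numerically, and the reason behind it is visible in the identity \( x^T M M^T x = \lVert M^T x \rVert^2 \geq 0 \). The sketch below assumes NumPy is available. The random matrix, the tolerance `tol`, and the helper name `is_positive_semidefinite` are illustrative assumptions, not a standard API.

```python
import numpy as np

rng = np.random.default_rng(0)      # fixed seed so the run is repeatable
M = rng.standard_normal((4, 3))     # arbitrary real matrix (hypothetical data)
G = M @ M.T                         # 4x4 and symmetric by construction


def is_positive_semidefinite(A, tol=1e-10):
    """Symmetric with all eigenvalues >= -tol (tol absorbs round-off)."""
    return bool(np.allclose(A, A.T) and np.all(np.linalg.eigvalsh(A) >= -tol))


x = rng.standard_normal(4)
quadratic_form = x @ G @ x

print(is_positive_semidefinite(G))                                # True
print(np.isclose(quadratic_form, np.linalg.norm(M.T @ x) ** 2))   # True
print(is_positive_semidefinite(np.array([[1.0, 2.0],
                                         [2.0, 1.0]])))           # False: eigenvalues -1 and 3
```

The small negative tolerance matters here: \( G \) has rank at most 3, so one of its eigenvalues is zero in exact arithmetic and may come out slightly negative in floating point.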

Positive semidefinite matrices, with their guarantee of diagonalizability and their rich set of properties, are foundational in many mathematical areas and applications. Understanding their characteristics and behavior is essential for anyone delving into optimization, statistics, quantum mechanics, and various other fields.

Diagonalizing Using Eigenvectors

Diagonalization using eigenvectors is one of the fundamental processes in linear algebra. The eigenvectors and eigenvalues of a matrix provide insights into its structure and behavior. When a matrix is diagonalizable, its eigenvectors offer a pathway to simplify it into a diagonal form, making further analyses and computations more tractable. Let's dive deeper into this process.

The Essence of Diagonalization

To diagonalize a matrix using its eigenvectors means to express the matrix as a product of three matrices: one formed by its eigenvectors, a diagonal matrix, and the inverse of the matrix of eigenvectors. Specifically, if \( A \) is a diagonalizable matrix, then:

\[

A = PDP^{-1}

\]

where:

- \( P \) is the matrix whose columns are the eigenvectors of \( A \).

- \( D \) is a diagonal matrix whose diagonal entries are the corresponding eigenvalues of \( A \).

The Process of Diagonalization

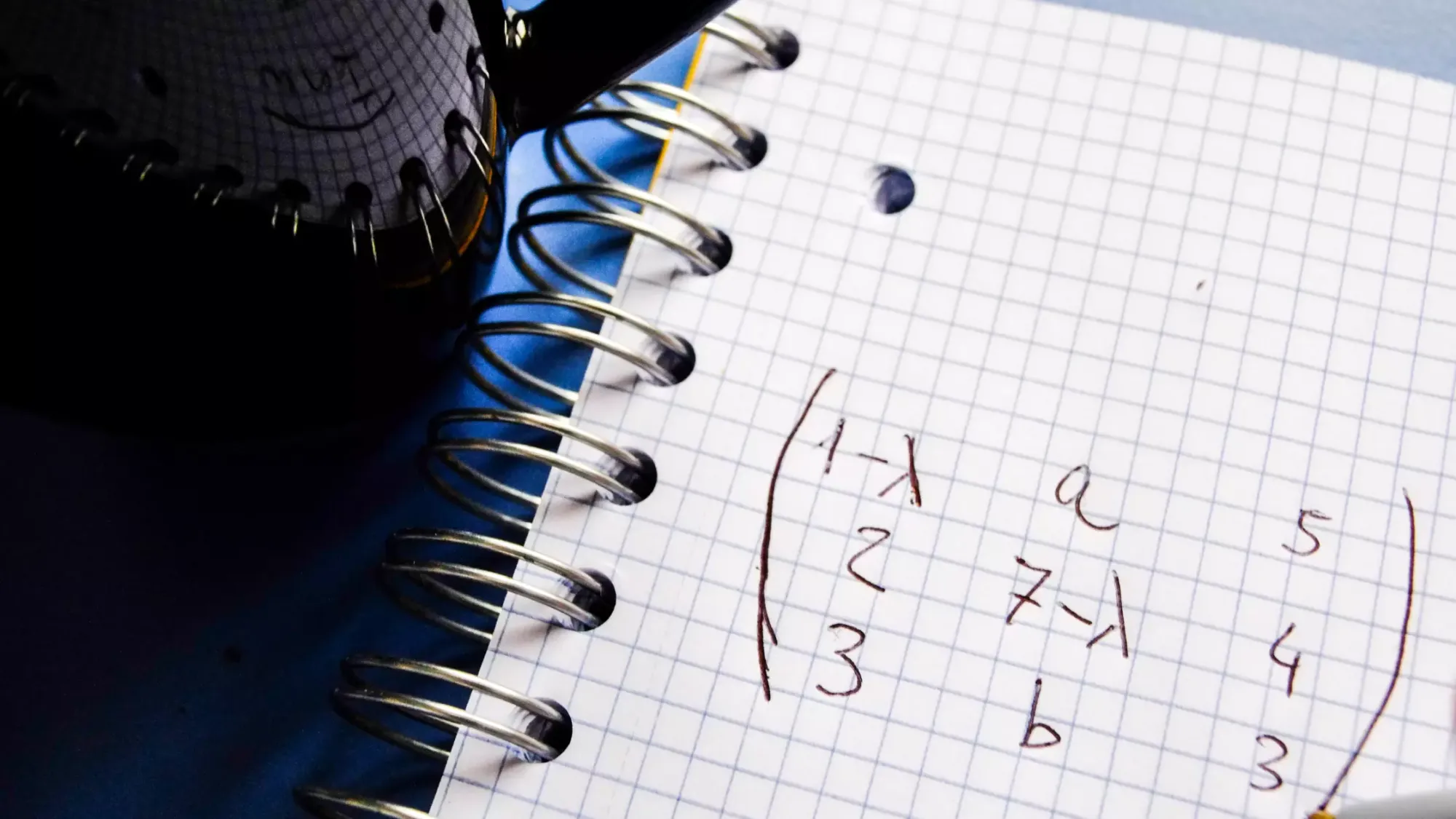

- Find the Eigenvalues: The eigenvalues of matrix \( A \) are the roots of its characteristic polynomial, that is, the solutions of the characteristic equation:

\[

\text{det}(A - \lambda I) = 0,

\]

where \( \lambda \) represents the eigenvalues and \( I \) is the identity matrix.

- Find the Eigenvectors: For each eigenvalue \( \lambda \), solve the equation:

\[

(A - \lambda I) \mathbf{v} = \mathbf{0}

\]

to find its corresponding eigenvectors. The vector \( \mathbf{v} \) is an eigenvector associated with the eigenvalue \( \lambda \).

- Form the Matrix \( P \): Once all eigenvectors are determined, place them as columns in matrix \( P \).

- Construct the Diagonal Matrix \( D \): Place the eigenvalues of \( A \) in the same order as their corresponding eigenvectors in \( P \) on the diagonal of matrix \( D \).

- Verify the Diagonalization: Multiply the matrices \( P \), \( D \), and \( P^{-1} \) in order to verify that their product is equal to matrix \( A \).
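
The steps above can be sketched with NumPy, assuming it is available. `numpy.linalg.eig` performs the first two steps at once, returning the eigenvalues and a matrix whose columns are eigenvectors. The function name `diagonalize` and the rank test with tolerance `tol` are assumptions of this sketch: the rank test is a numerical heuristic for detecting a defective matrix, not an exact criterion.

```python
import numpy as np


def diagonalize(A, tol=1e-10):
    """Return (P, D) with A = P D P^{-1}; raise if P looks numerically singular."""
    eigenvalues, P = np.linalg.eig(A)    # columns of P are eigenvectors
    if np.linalg.matrix_rank(P, tol=tol) < A.shape[0]:
        raise ValueError("not enough linearly independent eigenvectors")
    D = np.diag(eigenvalues)             # same order as the columns of P
    return P, D


A = np.array([[3.0, 2.0],
              [1.0, 4.0]])
P, D = diagonalize(A)

# Final step: verify the factorization.
print(np.allclose(P @ D @ np.linalg.inv(P), A))
```

For a real matrix with complex eigenvalues, `eig` returns complex arrays, and the same check applies over the complex numbers.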

Important Points to Note

- Diagonalizability: Not all matrices are diagonalizable. A matrix is diagonalizable if and only if it has enough linearly independent eigenvectors to form the matrix \( P \). This is equivalent to saying that the sum of the geometric multiplicities of all its eigenvalues equals the size of the matrix.

- Distinct Eigenvalues: If a matrix has \( n \) distinct eigenvalues (for an \( n \times n \) matrix), then it is guaranteed to be diagonalizable. Eigenvectors belonging to distinct eigenvalues are linearly independent, so \( n \) distinct eigenvalues supply \( n \) independent eigenvectors.

- Repeated Eigenvalues: In cases where eigenvalues are repeated, it's crucial to ensure that there are enough linearly independent eigenvectors corresponding to each repeated eigenvalue. The algebraic multiplicity (number of times an eigenvalue is repeated) and the geometric multiplicity (number of linearly independent eigenvectors for that eigenvalue) must be equal for diagonalizability.

Diagonalizing a matrix using its eigenvectors is a powerful tool that transforms the matrix into a simpler form, revealing its intrinsic properties. This process is central to many applications, from solving differential equations to quantum mechanics. Understanding the underlying principles and steps ensures that we can harness the full potential of diagonalization in various mathematical and practical scenarios.

Diagonalizing Without Eigenvectors

While eigenvectors and eigenvalues provide a direct path to diagonalizing matrices, there are situations where we might want to consider alternative methods, especially when dealing with matrices that are not diagonalizable in the traditional sense. Let's delve into some of these alternative approaches and the contexts in which they're useful.

1. Jordan Normal Form

For matrices that aren't diagonalizable due to insufficient independent eigenvectors, the Jordan Normal Form (JNF) offers a way to represent them in a nearly-diagonal form. While not strictly diagonal, the JNF is block-diagonal, with each block corresponding to an eigenvalue.

The process involves forming Jordan chains, which are sets of generalized eigenvectors, and then constructing the Jordan blocks. The JNF is especially useful because it retains many of the convenient properties of diagonal matrices, making it a valuable tool in various mathematical analyses.

2. Schur Decomposition

Another method to represent a matrix in a nearly-diagonal form is the Schur decomposition. Working over the complex numbers, every square matrix \( A \) can be expressed as:

\[

A = Q T Q^*

\]

where:

- \( Q \) is a unitary matrix (its columns form an orthonormal basis).

- \( T \) is an upper triangular matrix whose diagonal entries are the eigenvalues of \( A \).

The Schur decomposition is particularly useful in numerical algorithms and linear algebraic analyses.

3. Singular Value Decomposition (SVD)

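SVD is a powerful matrix factorization technique that brings any matrix, square or not, to diagonal form using singular values instead of eigenvalues. Unlike \( A = PDP^{-1} \), it uses two different unitary matrices, so in general it is not a similarity transformation. Given a matrix \( A \), its SVD is: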

\[

A = U \Sigma V^*,

\]

where:

- \( U \) and \( V \) are unitary matrices.

- \( \Sigma \) is a diagonal matrix (rectangular, with the same shape as \( A \), when \( A \) is not square) containing the singular values of \( A \), which are non-negative and conventionally arranged in decreasing order.

SVD is used extensively in applications like data compression, image processing, and principal component analysis.
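
A short NumPy sketch, assuming NumPy is available, shows the factorization on a non-square matrix. The \( 2 \times 3 \) matrix is an arbitrary example chosen here because its singular values come out as the round numbers 5 and 3.

```python
import numpy as np

A = np.array([[3.0, 2.0, 2.0],
              [2.0, 3.0, -2.0]])    # 2x3, so not even square

# svd returns U, the singular values as a 1-D array, and V^* (not V).
U, singular_values, Vh = np.linalg.svd(A)

# Sigma has the same shape as A, with the singular values on its main diagonal.
Sigma = np.zeros(A.shape)
np.fill_diagonal(Sigma, singular_values)

print(singular_values)
print(np.allclose(U @ Sigma @ Vh, A))   # A = U Sigma V^*
```

The singular values are the square roots of the eigenvalues of \( AA^T \), which here are 25 and 9.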

4. QR Algorithm

The QR algorithm is an iterative method used to find the eigenvalues of a matrix. Rather than computing eigenvectors first, it applies a sequence of similarity transformations that, under suitable conditions, drive the matrix toward triangular form (and toward diagonal form for symmetric matrices), from which the eigenvalues can be read off the diagonal.

The method involves repeatedly applying QR factorization and then forming a new matrix from the product of the factors, gradually driving the matrix towards a triangular form.
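
The iteration just described can be sketched in a few lines, assuming NumPy is available. This is the basic unshifted version, written for illustration. Production eigenvalue routines add refinements such as shifts and a preliminary Hessenberg reduction, which are omitted here. The function name `qr_algorithm`, the iteration count, and the symmetric test matrix are choices made for this example.

```python
import numpy as np


def qr_algorithm(A, iterations=200):
    """Unshifted QR iteration: A_{k+1} = R_k Q_k = Q_k^T A_k Q_k, similar to A_k."""
    A_k = np.array(A, dtype=float)
    for _ in range(iterations):
        Q, R = np.linalg.qr(A_k)   # factor the current iterate
        A_k = R @ Q                # multiply the factors in reverse order
    return A_k


B = np.array([[6.0, 2.0, 1.0],
              [2.0, 3.0, 1.0],
              [1.0, 1.0, 5.0]])
T = qr_algorithm(B)

print(np.round(np.diag(T), 4))                          # approximate eigenvalues of B
print(np.allclose(T, np.diag(np.diag(T)), atol=1e-8))   # off-diagonal entries have died out
```

Because each step is a similarity transformation, every iterate has the same eigenvalues as the original matrix. For this symmetric example the iterates approach a diagonal matrix.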

While eigenvectors offer a direct route to diagonalization, it's essential to recognize that there are alternative methods and forms that can provide insights into the structure and properties of matrices. These alternatives are especially valuable when dealing with matrices that aren't traditionally diagonalizable or when specific applications demand a different form or decomposition. Understanding these techniques broadens our toolbox in linear algebra and equips us to tackle a wider range of mathematical challenges.

Step-by-Step Diagonalization Examples

Diagonalizing matrices can be better understood through hands-on examples. Here, we'll walk through the diagonalization process for both a \(2 \times 2\) and a \(3 \times 3\) matrix.

Diagonalizing a \(2 \times 2\) Matrix

Let's consider the matrix:

\[

A = \begin{bmatrix}

3 & 2 \\

1 & 4 \\

\end{bmatrix}

\]

1. Determine the Eigenvalues

To find the eigenvalues, we solve the characteristic equation:

\[

\text{det}(A - \lambda I) = 0

\]

For our matrix, this equation becomes:

\[

\text{det} \left( \begin{bmatrix}

3 - \lambda & 2 \\

1 & 4 - \lambda \\

\end{bmatrix} \right) = 0

\]
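
Expanding the determinant gives a quadratic in \( \lambda \) that factors cleanly:

\[
(3 - \lambda)(4 - \lambda) - 2 \cdot 1 = \lambda^2 - 7\lambda + 10 = (\lambda - 2)(\lambda - 5) = 0
\]

so the roots are \( \lambda = 2 \) and \( \lambda = 5 \).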

2. Determine the Eigenvectors

For each eigenvalue, we'll solve the equation:

\[

(A - \lambda I) \mathbf{v} = \mathbf{0}

\]

to determine its corresponding eigenvector(s).

Eigenvalues:

- \( \lambda_1 = 2 \)

- \( \lambda_2 = 5 \)

Eigenvectors (normalized to unit length; any non-zero scalar multiple is also an eigenvector):

- Corresponding to \( \lambda_1 \): \(\begin{bmatrix} -0.8944 \\ 0.4472 \end{bmatrix}\)

- Corresponding to \( \lambda_2 \): \(\begin{bmatrix} -0.7071 \\ -0.7071 \end{bmatrix}\)

3. Construct the Diagonal Matrix

The diagonal matrix \(D\) will have the eigenvalues on its diagonal.

With the eigenvalues in the order \( \lambda_1, \lambda_2 \): \( D = \begin{bmatrix} 2 & 0 \\ 0 & 5 \end{bmatrix} \)

4. Construct the Matrix \(P\)

The matrix \(P\) will have the eigenvectors as its columns.

With the eigenvectors in the same order as the eigenvalues in \( D \): \( P = \begin{bmatrix} -0.8944 & -0.7071 \\ 0.4472 & -0.7071 \end{bmatrix} \)

5. Verify the Diagonalization

Ensure that \( A = PDP^{-1} \).
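
The check is easiest to carry out by hand with unnormalized eigenvectors, since rescaling the columns of \( P \) does not change the product \( PDP^{-1} \). Taking \( (-2, 1) \) and \( (1, 1) \) as the eigenvectors for \( \lambda = 2 \) and \( \lambda = 5 \):

\[
P = \begin{bmatrix} -2 & 1 \\ 1 & 1 \end{bmatrix}, \qquad P^{-1} = \frac{1}{3} \begin{bmatrix} -1 & 1 \\ 1 & 2 \end{bmatrix}, \qquad PD = \begin{bmatrix} -4 & 5 \\ 2 & 5 \end{bmatrix}
\]

\[
PDP^{-1} = \frac{1}{3} \begin{bmatrix} -4 & 5 \\ 2 & 5 \end{bmatrix} \begin{bmatrix} -1 & 1 \\ 1 & 2 \end{bmatrix} = \frac{1}{3} \begin{bmatrix} 9 & 6 \\ 3 & 12 \end{bmatrix} = \begin{bmatrix} 3 & 2 \\ 1 & 4 \end{bmatrix} = A
\]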

Diagonalizing a \(3 \times 3\) Matrix

Consider the matrix:

\[

B = \begin{bmatrix}

6 & 2 & 1 \\

2 & 3 & 1 \\

1 & 1 & 5 \\

\end{bmatrix}

\]

1. Determine the Eigenvalues

We solve the characteristic equation:

\[

\text{det}(B - \lambda I) = 0

\]

2. Determine the Eigenvectors

For each eigenvalue, we solve:

\[

(B - \lambda I) \mathbf{v} = \mathbf{0}

\]

Eigenvalues:

- \( \lambda_1 = 7.6805 \)

- \( \lambda_2 = 4.3931 \)

- \( \lambda_3 = 1.9264 \)

Eigenvectors (normalized to unit length):

- Corresponding to \( \lambda_1 \): \(\begin{bmatrix} -0.7813 \\ -0.4304 \\ -0.4520 \end{bmatrix}\)

- Corresponding to \( \lambda_2 \): \(\begin{bmatrix} -0.4771 \\ -0.0553 \\ 0.8771 \end{bmatrix}\)

- Corresponding to \( \lambda_3 \): \(\begin{bmatrix} 0.4025 \\ -0.9009 \\ 0.1622 \end{bmatrix}\)

3. Construct the Diagonal Matrix

The diagonal matrix \(D\) will contain the eigenvalues on its diagonal.

\[

D = \begin{bmatrix}

7.6805 & 0 & 0 \\

0 & 4.3931 & 0 \\

0 & 0 & 1.9264

\end{bmatrix}

\]

4. Construct the Matrix P

The matrix \(P\) will have the eigenvectors as its columns.

\[

P = \begin{bmatrix}

-0.7813 & -0.4771 & 0.4025 \\

-0.4304 & -0.0553 & -0.9009 \\

-0.4520 & 0.8771 & 0.1622

\end{bmatrix}

\]

5. Verify the Diagonalization

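Ensure that \( B = PDP^{-1} \). Because \( B \) is symmetric and the eigenvectors above have unit length, \( P \) is orthogonal, so \( P^{-1} = P^T \) and the check reduces to \( B = PDP^T \) (up to the rounding of the printed values).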
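
For a \( 3 \times 3 \) matrix this check is tedious by hand, so a numerical confirmation is convenient. The sketch below assumes NumPy is available and plugs in the four-decimal values printed above. The tolerances are deliberately loose because those inputs are rounded.

```python
import numpy as np

B = np.array([[6.0, 2.0, 1.0],
              [2.0, 3.0, 1.0],
              [1.0, 1.0, 5.0]])

# Values as printed in the text, rounded to four decimals.
D = np.diag([7.6805, 4.3931, 1.9264])
P = np.array([[-0.7813, -0.4771,  0.4025],
              [-0.4304, -0.0553, -0.9009],
              [-0.4520,  0.8771,  0.1622]])

reconstructed = P @ D @ np.linalg.inv(P)

print(np.round(reconstructed, 3))
print(np.allclose(reconstructed, B, atol=1e-2))      # matches B up to rounding
print(np.allclose(P.T @ P, np.eye(3), atol=1e-3))    # B is symmetric, so P is orthogonal
```

The last line reflects the symmetry of \( B \): its unit eigenvectors are mutually orthogonal, so the transpose of \( P \) could replace the explicit inverse.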

This completes the diagonalization process for both matrices. You can now use the matrices \( P \) and \( D \) to represent the original matrices \( A \) and \( B \) in the form \( A = PDP^{-1} \) and \( B = PDP^{-1}\) respectively.

Wrapping Up

Matrix diagonalization is a central concept in linear algebra that offers profound insights into the nature of matrices and linear transformations. Through the process of diagonalization, we can express matrices in a simpler, more tractable form, making various mathematical analyses and computations more efficient. Key to this process are the eigenvalues and eigenvectors of a matrix, which serve as the primary tools for traditional diagonalization. However, it's essential to note that not all matrices are diagonalizable in the conventional sense. For such cases, alternative methods like the Jordan Normal Form and the Schur decomposition offer nearly-diagonal representations, highlighting the diversity and richness of matrix structures.

Furthermore, the exploration of special matrix classes, such as symmetric matrices and positive semidefinite matrices, reveals guaranteed diagonalizability and other intriguing properties. These matrices not only deepen our understanding of diagonalization but also find applications in various scientific and engineering domains. From understanding the behavior of quantum systems to optimizing complex functions, the principles and techniques of diagonalization underpin many areas of study. As we've seen, the world of matrices is vast and nuanced, and understanding diagonalization is paramount to unlocking its myriad mysteries and potentials.